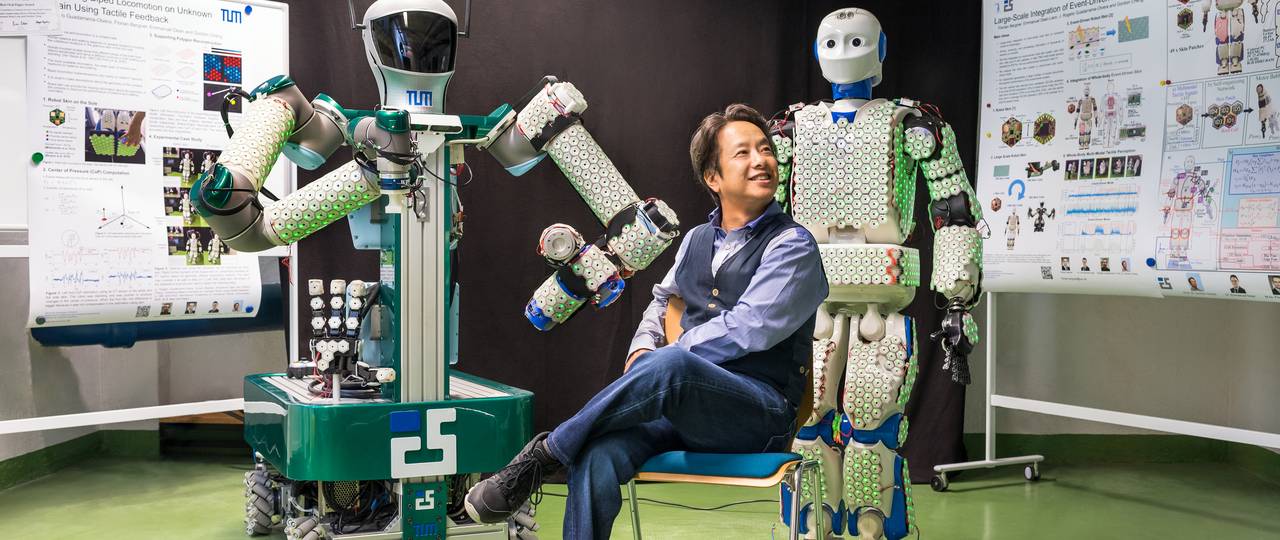

Gordon Cheng, Professor for Cognitive Systems, wants to dig deeper in understanding how the brain works.

Image: Astrid Eckert / TUM

Prof. Gordon Cheng on the challenges of fusing robotics and neuroscience

Combining neuroscience and robotics research has produced impressive results in the rehabilitation of paraplegic patients. A research team led by Prof. Gordon Cheng from the Technical University of Munich (TUM) was able to show that exoskeleton training not only helped patients to walk, but also stimulated their healing process. With these findings in mind, Prof. Cheng wants to take the fusion of robotics and neuroscience to the next level.

Prof. Cheng, by training a paraplegic patient with the exoskeleton within your sensational study under the “Walk Again” project, you found that patients regained a certain degree of control over the movement of their legs. Back then, this came as a complete surprise to you …

… and it somehow still is. Even though we had this breakthrough four years ago, this was only the beginning. To my regret, none of these patients is walking around freely and unaided yet. We have only touched the tip of the iceberg. To develop better medical devices, we need to dig deeper in understanding how the brain works and how to translate this into robotics.

In your paper published in “Science Robotics” this month, you and your colleague Prof. Nicolelis, a leading expert in neuroscience and in particular in the area of the human-machine interface, argue that some key challenges in the fusion of neuroscience and robotics need to be overcome in order to take the next steps. One of them is to “close the loop between the brain and the machine” – what do you mean by that?

The idea behind this is that the coupling between the brain and the machine should work in a way where the brain thinks of the machine as an extension of the body. Let’s take driving as an example. While driving a car, you don’t think about your moves, do you? But we still don’t know how this really works. My theory is that the brain somehow adapts to the car as if it is a part of the body. With this general idea in mind, it would be great to have an exoskeleton that would be embraced by the brain in the same way.

How could this be achieved in practice?

The exoskeleton that we have been using for our research so far is actually just a big chunk of metal and thus rather cumbersome for the wearer. I want to develop a “soft” exoskeleton, something that you can just wear like a piece of clothing that can both sense the user’s movement intentions and provide instantaneous feedback. Integrating this with recent advances in brain-machine interfaces that allow real-time measurement of brain responses enables the seamless adaptation of such exoskeletons to the needs of individual users. Given the recent technological advances and better understanding of how to decode the user’s momentary brain activity, the time is ripe for their integration into more human-centered or, better, brain-centered solutions.

What other pieces are still missing? You talked about providing a “more realistic functional model” for both disciplines.

We have to facilitate the transfer through new developments, for example robots that more closely resemble human behaviour and the construction of the human body, and thus lower the threshold for the use of robots in neuroscience. This is why we need more realistic functional models, which means that robots should be able to mimic human characteristics. Let’s take the example of a humanoid robot actuated with artificial muscles.

This natural construction mimicking muscles instead of the traditional motorized actuation would provide neuroscientists with a more realistic model for their studies. We think of this as a win-win situation to facilitate better cooperation between neuroscience and robotics in the future.

You are not alone in the mission of overcoming these challenges. In your Elite Graduate Program in Neuroengineering, the first and only one of its kind in Germany combining experimental and theoretical neuroscience with in-depth training in engineering, you are bringing together the best students in the field.

As described above, combining the two disciplines of robotics and neuroscience is a tough exercise, and that is one of the main reasons why I created this master’s program in Munich. To me, it is important to teach the students to think more broadly and across disciplines, to find previously unimagined solutions. This is why lecturers from various fields, for example hospitals or the sports department, are teaching our students. We need to create a new community and a new culture in the field of engineering. From my standpoint, education is the key factor.
