Imagine you are in charge of the switch on a trolley track.

The express is due any minute, but as you glance down the line you see a school bus, filled with children, stalled at the level crossing. No problem; that’s why you have this switch. But on the alternate track there’s more trouble: your child, who has come to work with you, has fallen down on the rails and can’t get up. That switch can save your child or a busload of others, but not both. What do you do?

This ethical puzzler is commonly known as the Trolley Problem. It’s a standard topic in philosophy and ethics classes, because your answer says a lot about how you view the world. But in a very 21st-century take, several writers have adapted the scenario to a modern obsession: autonomous vehicles. Google’s self-driving cars have already driven 1.7 million miles on American roads and have never been the cause of an accident during that time, the company says. Volvo says it will have a self-driving model on Swedish highways by 2017. Elon Musk says the technology is so close that he can have current-model Teslas ready to take the wheel on “major roads” by this summer.

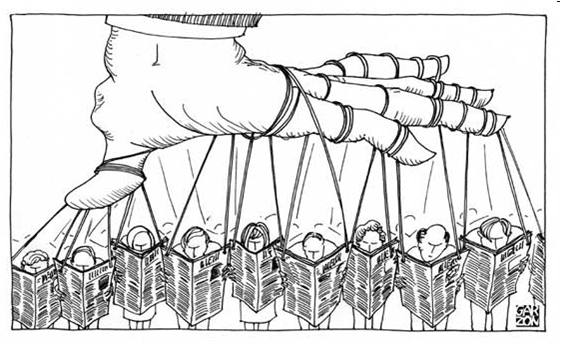

Who watches the watchers?

The technology may have arrived, but are we ready?

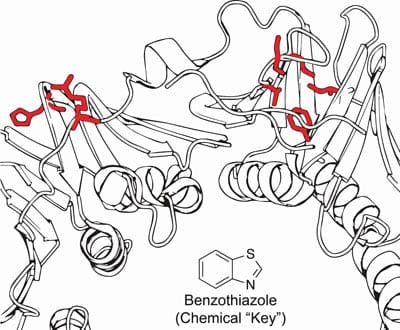

Google’s cars can already handle real-world hazards, such as cars suddenly swerving in front of them. But in some situations, a crash is unavoidable. (In fact, Google’s cars have been in dozens of minor accidents, all of which the company blames on human drivers.) How will a Google car, or an ultra-safe Volvo, be programmed to handle a no-win situation (a blown tire, perhaps) where it must choose between swerving into oncoming traffic and steering directly into a retaining wall? The computers will certainly be fast enough to make a reasoned judgment within milliseconds. They would have time to scan the cars ahead and identify the one most likely to survive a collision, for example, or the one with the most other humans inside. But should they be programmed to make the decision that is best for their owners? Or the choice that does the least harm, even if that means choosing to slam into a retaining wall to avoid hitting an oncoming school bus? Who will make that call, and how will they decide?

“Ultimately, this problem devolves into a choice between utilitarianism and deontology,” said UAB alumnus Ameen Barghi. Barghi, who graduated in May and is headed to Oxford University this fall as UAB’s third Rhodes Scholar, is no stranger to moral dilemmas. He was a senior leader on UAB’s Bioethics Bowl team, which won the 2015 national championship. Their winning debates included such topics as the use of clinical trials for Ebola virus, and the ethics of a hypothetical drug that could make people fall in love with each other. In last year’s Ethics Bowl competition, the team argued another provocative question related to autonomous vehicles: If they turn out to be far safer than regular cars, would the government be justified in banning human driving completely? (Their answer, in a nutshell: yes.)

Death in the driver’s seat

So should your self-driving car be programmed to kill you in order to save others?

Read more: Will your self-driving car be programmed to kill you?

