Image: Kalyan Veeramachaneni/MIT CSAIL

Virtual artificial intelligence analyst developed by the Computer Science and Artificial Intelligence Lab and PatternEx reduces false positives by a factor of 5

Today’s security systems usually fall into one of two categories: human or machine. So-called “analyst-driven solutions” rely on rules created by human experts and therefore miss any attacks that don’t match the rules. Meanwhile, today’s machine-learning approaches rely on “anomaly detection,” which tends to trigger false positives that both create distrust of the system and still have to be investigated by humans.

But what if there were a solution that could merge those two worlds? What would it look like?

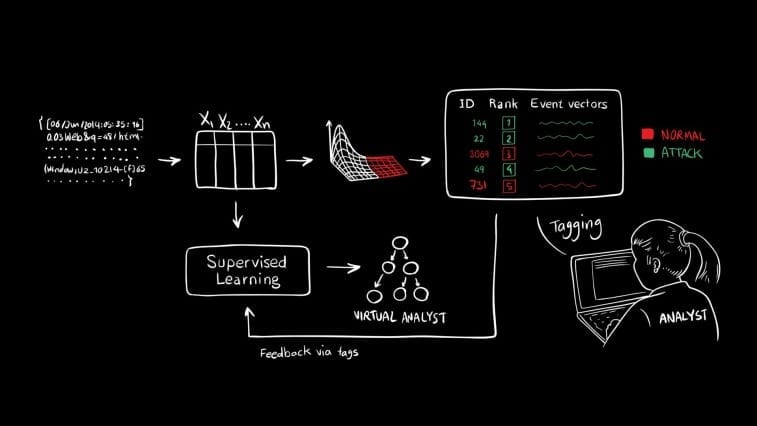

In a new paper, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the machine-learning startup PatternEx demonstrate an artificial intelligence platform called AI2 that predicts cyber-attacks significantly better than existing systems by continuously incorporating input from human experts. (The name comes from merging artificial intelligence with what the researchers call “analyst intuition.”)

The team showed that AI2 can detect 85 percent of attacks, which is roughly three times better than previous benchmarks, while also reducing the number of false positives by a factor of 5. The system was tested on 3.6 billion pieces of data known as “log lines,” which were generated by millions of users over a period of three months.

To predict attacks, AI2 combs through data and detects suspicious activity by clustering the data into meaningful patterns using unsupervised machine learning. It then presents this activity to human analysts, who confirm which events are actual attacks, and incorporates that feedback into its models for the next set of data.
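To make the clustering step more concrete, here is a minimal sketch in Python, assuming events have already been turned into numeric feature vectors. It groups the events with plain k-means and scores each one by its distance to the nearest cluster center, so events that fit no common pattern score highest. The function names, the choice of k-means, and the deterministic initialization are illustrative assumptions, not AI2's actual implementation.

```python
import math


def kmeans(points, k, iters=20):
    """Plain Lloyd's k-means on tuples of numbers; returns the centroids."""
    # Deterministic init from the first k points keeps this demo reproducible;
    # real systems usually prefer randomized seeding such as k-means++.
    centroids = [list(p) for p in points[:k]]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[i] = [sum(coords) / len(members) for coords in zip(*members)]
    return centroids


def anomaly_scores(points, k=2):
    """Score each point by its distance to the nearest cluster centroid."""
    centroids = kmeans(points, k)
    return [min(math.dist(p, c) for c in centroids) for p in points]


# Hypothetical events: two tight groups of normal behavior plus one oddball.
events = [(0, 0), (10, 10), (1, 0), (0, 1), (1, 1), (11, 10), (10, 11), (11, 11), (40, -20)]
scores = anomaly_scores(events, k=2)
most_suspicious = max(range(len(events)), key=scores.__getitem__)
print(events[most_suspicious])  # → (40, -20)
```

Note that the outlier drags its cluster's centroid toward itself; it still scores highest here, but this sensitivity is one reason a single unsupervised detector tends to produce noisy rankings.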

“You can think about the system as a virtual analyst,” says CSAIL research scientist Kalyan Veeramachaneni, who developed AI2 with Ignacio Arnaldo, a chief data scientist at PatternEx and a former CSAIL postdoc. “It continuously generates new models that it can refine in as little as a few hours, meaning it can improve its detection rates significantly and rapidly.”

Veeramachaneni presented a paper about the system at last week’s IEEE International Conference on Big Data Security in New York City.

Creating cybersecurity systems that merge human- and computer-based approaches is tricky, partly because of the challenge of manually labeling cybersecurity data for the algorithms.

For example, let’s say you want to develop a computer-vision algorithm that can identify objects with high accuracy. Labeling data for that is simple: Just enlist a few human volunteers to label photos as either “objects” or “non-objects,” and feed that data into the algorithm.

But for a cybersecurity task, the average person on a crowdsourcing site like Amazon Mechanical Turk simply doesn’t have the skill set to apply labels like “DDoS” or “exfiltration attacks,” says Veeramachaneni. “You need security experts.”

That opens up another problem: Experts are busy, and they can’t spend all day reviewing reams of data that have been flagged as suspicious. Companies have been known to give up on platforms that are too much work, so an effective machine-learning system has to be able to improve itself without overwhelming its human overlords.

AI2’s secret weapon is that it fuses three different unsupervised-learning methods and then shows the top events to analysts to label. It then builds a supervised model that it can constantly refine through what the team calls a “continuous active learning system.”
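The article does not say how AI2 combines its three detectors, so the following is only a sketch of one common way to fuse unsupervised scores: convert each detector's raw scores to ranks scaled into [0, 1], average them, and hand the top-k events to the analyst. The three detectors here (a z-score test, a k-nearest-neighbor distance, and an L1 distance from the median) are simple stand-ins chosen for brevity, not necessarily the methods AI2 uses.

```python
import math
import statistics


def zscore_scores(rows):
    """Largest absolute z-score across a row's features."""
    stats = [(statistics.mean(c), statistics.pstdev(c) or 1.0) for c in zip(*rows)]
    return [max(abs(x - m) / s for x, (m, s) in zip(row, stats)) for row in rows]


def knn_scores(rows, k=2):
    """Distance to the k-th nearest other row (needs at least two rows)."""
    scores = []
    for i, row in enumerate(rows):
        dists = sorted(math.dist(row, other) for j, other in enumerate(rows) if j != i)
        scores.append(dists[min(k, len(dists)) - 1])
    return scores


def median_scores(rows):
    """L1 distance from the per-feature median, a robust notion of 'typical'."""
    med = [statistics.median(c) for c in zip(*rows)]
    return [sum(abs(x - m) for x, m in zip(row, med)) for row in rows]


def to_unit_ranks(scores):
    """Replace raw scores with ranks scaled to [0, 1] so detectors are comparable."""
    order = sorted(range(len(scores)), key=scores.__getitem__)
    denom = max(len(scores) - 1, 1)
    ranks = [0.0] * len(scores)
    for rank, idx in enumerate(order):
        ranks[idx] = rank / denom
    return ranks


def fused_top_k(rows, k):
    """Average the rank-scaled outputs of three detectors; return the k most abnormal row indices."""
    per_detector = [to_unit_ranks(f(rows)) for f in (zscore_scores, knn_scores, median_scores)]
    fused = [sum(r) / len(per_detector) for r in zip(*per_detector)]
    return sorted(range(len(rows)), key=fused.__getitem__, reverse=True)[:k]


events = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.1), (1.0, 1.2), (1.2, 1.0), (9.0, 9.0)]
print(fused_top_k(events, k=1))  # → [5]
```

Ranking before averaging is a design choice: raw scores from different detectors live on incomparable scales, and ranks put them on equal footing without any tuning.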

Specifically, on day one of its training, AI2 picks the 200 most abnormal events and gives them to the expert. As it improves over time, it identifies more and more of the events as actual attacks, meaning that in a matter of days the analyst may only be looking at 30 or 40 events a day.
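The daily loop described above can be sketched as follows. On the first day no labels exist, so events are ranked by an unsupervised score; once the analyst has labeled both attacks and benign events, a supervised model takes over the ranking and is refit after every round of feedback. The nearest-centroid classifier, the simulated `analyst` function, the `magnitude` score, and the `budget` parameter are all hypothetical. The article gives AI2's daily review load (200 events on day one, eventually 30 or 40) but not how that load shrinks or which supervised model AI2 uses.

```python
import math
import statistics


class NearestCentroidModel:
    """Toy supervised model: an event scores higher the closer it is to the
    attack centroid relative to the benign centroid."""

    def __init__(self):
        self.centroids = {}  # label (0 = benign, 1 = attack) -> mean feature vector

    def fit(self, rows, labels):
        for label in (0, 1):
            members = [r for r, y in zip(rows, labels) if y == label]
            if members:
                self.centroids[label] = [statistics.mean(c) for c in zip(*members)]

    def score(self, row):
        if len(self.centroids) < 2:
            return None  # both classes must be labeled before the model can rank
        return math.dist(row, self.centroids[0]) - math.dist(row, self.centroids[1])


def run_day(events, unsupervised_score, model, labeled, analyst, budget):
    """One round of active learning: rank events, send the top `budget` to the
    analyst, store the labels, and refit the model on every label so far."""

    def rank_key(event):
        s = model.score(event)
        return unsupervised_score(event) if s is None else s

    queue = sorted(events, key=rank_key, reverse=True)[:budget]
    for event in queue:
        labeled.append((event, analyst(event)))  # analyst answers 1 (attack) or 0 (benign)
    if labeled:
        rows, labels = zip(*labeled)
        model.fit(rows, labels)
    return queue


def magnitude(event):  # hypothetical unsupervised score: bigger values look stranger
    return abs(event[0]) + abs(event[1])


def analyst(event):  # simulated expert: attacks sit in the upper-right corner
    return int(event[0] > 4 and event[1] > 4)


model, labeled = NearestCentroidModel(), []
day1 = run_day([(0, 0), (1, 0), (6, 0), (5, 5), (6, 6), (0, 1)], magnitude, model, labeled, analyst, budget=4)
day2 = run_day([(10, 0), (4.5, 4.5), (0, 0)], magnitude, model, labeled, analyst, budget=1)
print(day2)  # → [(4.5, 4.5)]
```

On day two the unsupervised score alone would have ranked (10, 0) first, a false positive; after one round of feedback, the supervised model surfaces the attack-like event instead.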

“This paper brings together the strengths of analyst intuition and machine learning, and ultimately drives down both false positives and false negatives,” says Nitesh Chawla, the Frank M. Freimann Professor of Computer Science at the University of Notre Dame. “This research has the potential to become a line of defense against attacks such as fraud, service abuse and account takeover, which are major challenges faced by consumer-facing systems.”

The team says that AI2 can scale to billions of log lines per day, transforming the data on a minute-by-minute basis into different “features,” or discrete types of behavior that are eventually deemed “normal” or “abnormal.”
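As an illustration of turning log lines into minute-by-minute features, the sketch below groups lines by user and minute and counts events, failures, and distinct source IPs. The log format and the three features are hypothetical; the article does not list the features AI2 computes.

```python
from collections import defaultdict
from datetime import datetime


def minute_features(log_lines):
    """Aggregate raw log lines into per-(user, minute) behavioral features.

    Assumes a hypothetical whitespace-separated line format:
        <ISO-8601 timestamp> <user> <source_ip> <status>
    """
    buckets = defaultdict(lambda: {"events": 0, "failures": 0, "ips": set()})
    for line in log_lines:
        ts, user, ip, status = line.split()
        minute = datetime.fromisoformat(ts).replace(second=0, microsecond=0)
        bucket = buckets[(user, minute)]
        bucket["events"] += 1
        if status == "FAIL":
            bucket["failures"] += 1
        bucket["ips"].add(ip)
    # Collapse each IP set to a count so every feature is numeric.
    return {
        key: {"events": b["events"], "failures": b["failures"], "distinct_ips": len(b["ips"])}
        for key, b in buckets.items()
    }


logs = [
    "2024-01-01T10:15:03 alice 10.0.0.1 OK",
    "2024-01-01T10:15:41 alice 10.0.0.2 FAIL",
    "2024-01-01T10:16:05 alice 10.0.0.1 OK",
    "2024-01-01T10:15:20 bob 10.0.0.9 FAIL",
]
for (user, minute), feats in sorted(minute_features(logs).items(), key=lambda kv: kv[0]):
    print(user, minute.strftime("%H:%M"), feats)
```

Each resulting feature vector could then feed unsupervised detectors like those described earlier in the article.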

“The more attacks the system detects, the more analyst feedback it receives, which, in turn, improves the accuracy of future predictions,” Veeramachaneni says. “That human-machine interaction creates a beautiful, cascading effect.”

Learn more: System predicts 85 percent of cyber-attacks using input from human experts
