Image: Christine Daniloff, MIT

Using insights into how people intuit others’ emotions, researchers have designed a model that approximates this aspect of human social intelligence.

When interacting with another person, you likely spend part of your time trying to anticipate how they will feel about what you’re saying or doing. This task requires a cognitive skill called theory of mind, which helps us to infer other people’s beliefs, desires, intentions, and emotions.

MIT neuroscientists have now designed a computational model that can predict other people’s emotions — including joy, gratitude, confusion, regret, and embarrassment — approximating human observers’ social intelligence. The model was designed to predict the emotions of people involved in a situation based on the prisoner’s dilemma, a classic game theory scenario in which two people must decide whether to cooperate with their partner or betray them.

To build the model, the researchers incorporated several factors that have been hypothesized to influence people’s emotional reactions, including that person’s desires, their expectations in a particular situation, and whether anyone was watching their actions.

“These are very common, basic intuitions, and what we said is, we can take that very basic grammar and make a model that will learn to predict emotions from those features,” says Rebecca Saxe, the John W. Jarve Professor of Brain and Cognitive Sciences, a member of MIT’s McGovern Institute for Brain Research, and the senior author of the study.

Sean Dae Houlihan PhD ’22, a postdoc at the Neukom Institute for Computational Science at Dartmouth College, is the lead author of the paper, which appears today in Philosophical Transactions A. Other authors include Max Kleiman-Weiner PhD ’18, a postdoc at MIT and Harvard University; Luke Hewitt PhD ’22, a visiting scholar at Stanford University; and Joshua Tenenbaum, a professor of computational cognitive science at MIT and a member of the Center for Brains, Minds, and Machines and MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL).

Predicting emotions

While a great deal of research has gone into training computer models to infer someone’s emotional state based on their facial expression, that is not the most important aspect of human emotional intelligence, Saxe says. Much more important is the ability to predict someone’s emotional response to events before they occur.

“The most important thing about what it is to understand other people’s emotions is to anticipate what other people will feel before the thing has happened,” she says. “If all of our emotional intelligence was reactive, that would be a catastrophe.”

To try to model how human observers make these predictions, the researchers used scenarios taken from a British game show called “Golden Balls.” On the show, contestants are paired up with a pot of $100,000 at stake. After negotiating with their partner, each contestant decides, secretly, whether to split the pot or try to steal it. If both decide to split, they each receive $50,000. If one splits and one steals, the stealer gets the entire pot. If both try to steal, no one gets anything.
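The payoff rules above are compact enough to write down directly. The following is a minimal sketch in Python, not code from the study; the function name `payoffs` and the string labels `"split"` and `"steal"` are assumptions chosen for illustration.

```python
from typing import Tuple

POT = 100_000  # pot size quoted in the article

def payoffs(a: str, b: str, pot: int = POT) -> Tuple[int, int]:
    """Return (payoff_a, payoff_b) for two secret choices, 'split' or 'steal'."""
    valid = ("split", "steal")
    if a not in valid or b not in valid:
        raise ValueError("choices must be 'split' or 'steal'")
    if a == "split" and b == "split":
        return pot // 2, pot // 2   # both split: share the pot equally
    if a == "steal" and b == "split":
        return pot, 0               # the stealer takes everything
    if a == "split" and b == "steal":
        return 0, pot
    return 0, 0                     # both steal: no one gets anything

# Print the full outcome table.
for a in ("split", "steal"):
    for b in ("split", "steal"):
        print(a, b, payoffs(a, b))
```

The table has the same structure as the prisoner’s dilemma mentioned earlier: stealing never pays less money than splitting, yet mutual stealing leaves both players with nothing.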

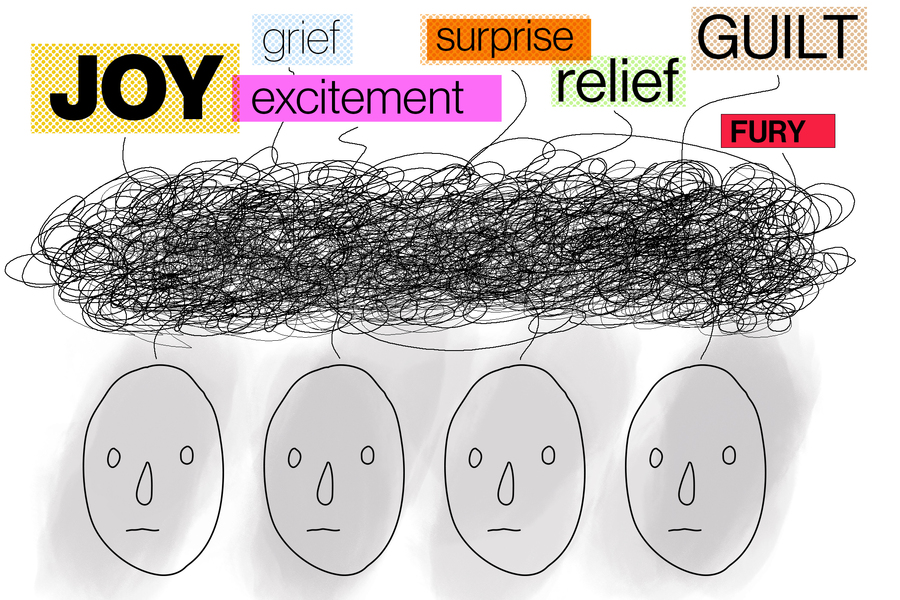

Depending on the outcome, contestants may experience a range of emotions — joy and relief if both contestants split, surprise and fury if one’s opponent steals the pot, and perhaps guilt mingled with excitement if one successfully steals.

To create a computational model that can predict these emotions, the researchers designed three separate modules. The first module is trained to infer a person’s preferences and beliefs based on their action, through a process called inverse planning.

“This is an idea that says if you see just a little bit of somebody’s behavior, you can probabilistically infer things about what they wanted and expected in that situation,” Saxe says.

Using this approach, the first module can predict contestants’ motivations based on their actions in the game. For example, if someone decides to split in an attempt to share the pot, it can be inferred that they also expected the other person to split. If someone decides to steal, they may have expected the other person to steal, and didn’t want to be cheated. Or, they may have expected the other person to split and decided to try to take advantage of them.

The model can also integrate knowledge about specific players, such as the contestant’s occupation, to help it infer the players’ most likely motivation.
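One way to picture inverse planning is as Bayes’ rule applied to a simple decision model: assume a player chooses noisily in favor of whatever maximizes their expected utility, then ask which beliefs and preferences make the observed choice most likely. The sketch below is a toy illustration under those assumptions, not the researchers’ implementation. The hypothesis grid, the weights `w_guilt` and `w_sucker`, and the softmax parameter `beta` are all invented for the example.

```python
import itertools
import math

ACTIONS = ("split", "steal")

def payoff(own, other):
    """Own share of the pot (0, 0.5 or 1) under the game-show rules."""
    if own == "split":
        return 0.5 if other == "split" else 0.0
    return 1.0 if other == "split" else 0.0

def expected_utility(action, p_split, w_guilt, w_sucker):
    """Expected utility of an action for one hypothetical kind of player.

    p_split  : the player's belief that the partner will split
    w_guilt  : assumed cost of stealing from a partner who split
    w_sucker : assumed cost of splitting while the partner steals
    """
    total = 0.0
    for other, prob in (("split", p_split), ("steal", 1.0 - p_split)):
        u = payoff(action, other)
        if action == "steal" and other == "split":
            u -= w_guilt
        if action == "split" and other == "steal":
            u -= w_sucker
        total += prob * u
    return total

def action_likelihood(action, hypothesis, beta=5.0):
    """Softmax (noisily rational) probability of choosing the action."""
    scores = {a: math.exp(beta * expected_utility(a, *hypothesis)) for a in ACTIONS}
    return scores[action] / sum(scores.values())

# Coarse, made-up hypothesis grid: (p_split, w_guilt, w_sucker).
HYPOTHESES = list(itertools.product((0.1, 0.5, 0.9), (0.0, 1.0), (0.0, 1.0)))

def posterior(action, prior=None):
    """Bayes' rule: P(hypothesis | action) is proportional to likelihood * prior."""
    if prior is None:
        prior = {h: 1.0 / len(HYPOTHESES) for h in HYPOTHESES}  # uniform prior
    unnorm = {h: action_likelihood(action, h) * prior[h] for h in HYPOTHESES}
    z = sum(unnorm.values())
    return {h: v / z for h, v in unnorm.items()}

def expected_belief_in_split(action):
    """Posterior mean of the player's belief that the partner would split."""
    return sum(h[0] * p for h, p in posterior(action).items())

for action in ACTIONS:
    print(action, round(expected_belief_in_split(action), 3))
```

In this toy setup, observing a split shifts the inferred belief toward expecting the partner to split, while a steal stays consistent with both motives described above: fear of being cheated and an attempt to take advantage. Player-specific knowledge, such as occupation, could plausibly enter through the `prior` argument, although that is an assumption of this sketch.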

The second module compares the outcome of the game with what each player wanted and expected to happen. Then, a third module predicts what emotions the contestants may be feeling, based on the outcome and what was known about their expectations. This third module was trained to predict emotions based on predictions from human observers about how contestants would feel after a particular outcome. The authors emphasize that this is a model of human social intelligence, designed to mimic how observers causally reason about each other’s emotions, not a model of how people actually feel.
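The comparison performed by the second module can be pictured as computing a few appraisal features from the outcome and the player’s inferred expectations. The sketch below is a hypothetical illustration only: the feature names (`money`, `prediction_error`, `was_cheated`, `took_advantage`) are assumptions, and the learned mapping from such features to emotions, which the researchers fit to human observers’ judgments, is deliberately left out.

```python
from dataclasses import dataclass

def payoff(own: str, other: str) -> float:
    """Own share of the pot (0, 0.5 or 1) under the game-show rules."""
    if own == "split":
        return 0.5 if other == "split" else 0.0
    return 1.0 if other == "split" else 0.0

@dataclass
class Appraisal:
    money: float             # share of the pot actually won
    prediction_error: float  # actual share minus the share the player expected
    was_cheated: bool        # player split, partner stole
    took_advantage: bool     # player stole, partner split

def appraise(own: str, other: str, p_split: float) -> Appraisal:
    """Compare the outcome with what the player expected.

    p_split is the player's (inferred) belief that the partner would split.
    """
    actual = payoff(own, other)
    expected = p_split * payoff(own, "split") + (1.0 - p_split) * payoff(own, "steal")
    return Appraisal(
        money=actual,
        prediction_error=actual - expected,
        was_cheated=(own == "split" and other == "steal"),
        took_advantage=(own == "steal" and other == "split"),
    )

# A trusting player who split and was betrayed.
print(appraise("split", "steal", p_split=0.9))
```

A trusting player who splits and is betrayed ends up with a large negative prediction error and the `was_cheated` flag set, the kind of pattern that observers might associate with surprise and fury.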

“From the data, the model learns that what it means, for example, to feel a lot of joy in this situation, is to get what you wanted, to do it by being fair, and to do it without taking advantage,” Saxe says.

Core intuitions

Once the three modules were up and running, the researchers used them on a new dataset from the game show to determine how the model’s emotion predictions compared with the predictions made by human observers. The model performed much better at that task than any previous model of emotion prediction.

The model’s success stems from its incorporation of key factors that the human brain also uses when predicting how someone else will react to a given situation, Saxe says. Those include computations of how a person will evaluate and emotionally react to a situation, based on their desires and expectations, which relate not only to material gain but also to how they are viewed by others.

“Our model has those core intuitions, that the mental states underlying emotion are about what you wanted, what you expected, what happened, and who saw. And what people want is not just stuff. They don’t just want money; they want to be fair, but also not to be the sucker, not to be cheated,” she says.

“The researchers have helped build a deeper understanding of how emotions contribute to determining our actions; and then, by flipping their model around, they explain how we can use people’s actions to infer their underlying emotions. This line of work helps us see emotions not just as ‘feelings’ but as playing a crucial, and subtle, role in human social behavior,” says Nick Chater, a professor of behavioral science at the University of Warwick, who was not involved in the study.

In future work, the researchers hope to adapt the model so that it can perform more general predictions based on situations other than the game-show scenario used in this study. They are also working on creating models that can predict what happened in the game based solely on the expression on the faces of the contestants after the results were announced.

Original Article: Computational model mimics humans’ ability to predict emotions
