via Northeastern University

The researchers simulated a smart speaker interaction to test how altering people’s moods might influence the extent to which they trust autonomous products.

Stanford engineers investigated how people’s moods might affect their trust of autonomous products, such as smart speakers. They uncovered a complicated relationship.

While a certain level of trust is needed for autonomous cars and smart technologies to reach their full potential, these technologies are not infallible. That is why we’re supposed to keep our hands on the wheel of self-driving cars and follow traffic laws, even when they contradict our map app’s instructions. Recognizing the significance of trust in devices, and the dangers of having too much of it, Erin MacDonald, assistant professor of mechanical engineering at Stanford University, researches whether products can be designed to encourage more appropriate levels of trust among consumers.

In a paper published last month in The Journal of Mechanical Design, MacDonald and Ting Liao, her former graduate student, examined how altering people’s moods influenced their trust in a smart speaker. Their results were so surprising that they ran the experiment a second time with more participants, but the results didn’t change.

“We definitely thought that if people were sad, they would be more suspicious of the speaker and if people were happy, they would be more trusting,” said MacDonald, who is a senior author of the paper. “It wasn’t even close to that simple.”

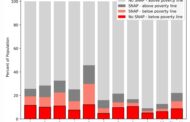

Overall, the experiments support the notion that a user’s opinion of how well a technology performs is the biggest factor in whether they trust it. However, user trust also differed by age group, gender and education level. The most peculiar result was that, among the people who said the smart speaker met their expectations, participants trusted it more if the researchers had tried to put them in either a positive or a negative mood; participants in the neutral mood group did not show the same elevation in trust.

“An important takeaway from this research is that negative emotions are not always bad for forming trust. We want to keep this in mind because trust is not always good,” said Liao, who is now an assistant professor at the Stevens Institute of Technology in New Jersey and lead author of the paper.

Manipulating trust

Scientific models of interpersonal trustworthiness suggest that our trust in other people can rely on our perceptions of their abilities, but that we also consider whether they are caring, objective, fair and honest, among many other characteristics. Beyond the qualities of the person we are interacting with, research has also shown that our own personal qualities affect our trust in any situation. But when studying how people interact with technology, most research concentrates on the influence of the technology’s performance, overlooking how trust is shaped by user perception.

In their new study, MacDonald and Liao chose to address this gap by studying mood, because previous research has shown that emotional states can affect the perceptions that inform interpersonal trustworthiness, with negative moods generally reducing trust.

Over two identical experiments, the researchers analyzed the interactions of 63 participants with a simulated smart speaker: a microphone and a set of prerecorded answers hidden beneath a deactivated smart speaker. Before using the speaker, participants were surveyed about how much they generally trust machines and were shown images that, according to previous research, would put them in a good mood, put them in a bad mood, or leave their mood unchanged.

The participants asked the speaker 10 predetermined questions and received 10 prerecorded answers of varying accuracy or helpfulness. After each question, participants rated their satisfaction with the answer and reported whether it met their expectations. At the end of the study, they described how much they trusted the speaker.

If participants didn’t think the speaker delivered satisfactory answers, none of the variables measured or manipulated in the experiment (including age, gender, education and mood) changed their minds. However, among participants who said the speaker lived up to their expectations, men and people with less education were more likely to trust the speaker, while people over age 65 were significantly less likely to trust the device. The biggest surprise for the researchers was that, in the group whose expectations were met, mood priming led to increased trust regardless of whether the researchers tried to put participants in a good mood or a bad mood. The researchers did not investigate why this happened, but they noted that existing theory suggests people may become more tolerant of, or empathetic toward, a product when they are emotional.

“Product designers always try to make people happy when they’re interacting with a product,” said Liao. “This result is quite powerful because it suggests that we should not only focus on positive emotions but that there is a whole emotional spectrum that is worth studying.”

Proceed with caution

This research suggests there is a nuanced and complicated relationship between who we are and how we feel about technology. Working out the details will take further research, but the researchers emphasize that trust between humans and autonomous technologies deserves more attention now than ever.

“It bothers me that engineers pretend that they’re neutral to affecting people’s emotions and their decisions and judgments, but everything they design says, ‘Trust me, I’ve got this totally under control. I’m the most high-tech thing you’ve ever used,’” said MacDonald. “We test cars for safety, so why should we not test autonomous cars to determine whether the driver has the right level of knowledge and trust in the vehicle to operate it?”

As an example of a feature that might better regulate user trust, MacDonald recalled the more prominent warnings she saw on navigation apps during the Glass Fire, which burned north of Stanford in fall 2020; the warnings instructed people to drive cautiously because the fire may have altered road conditions. In light of their findings, the researchers would also like to see design-based solutions that account for the influence of users’ moods, both good and bad.

“The ultimate goal is to see whether we can calibrate people’s emotions through design so that, if a product isn’t mature enough or if the environment is complicated, we can adjust their trust appropriately,” said Liao. “That is probably the future in five to 10 years.”
