Google’s experiment shows that having more processing power and more data makes a difference

Working at the secretive Google X lab, researchers from Google and Stanford connected 1,000 computers, turned them loose on 10 million YouTube stills for three days, and watched as they learned to identify cat faces.

The research, thus summarized, is good for a laugh. “Perhaps this is not precisely what Turing had in mind,” wrote The Atlantic’s Alexis Madrigal. Sure it was, countered The Cato Institute’s Julian Sanchez: Google was training its computers to pass the “Purring Test.”

But what’s most fascinating about the study is that the researchers didn’t actually tell the computers to look for cat faces. The machines started doing that on their own.

The paper’s actual title, you see, has nothing to do with felines, or YouTube for that matter. It’s called “Building High-level Features Using Large Scale Unsupervised Learning,” and what it’s really about is the ability of computer networks to learn what’s meaningful in images—without humans’ help.

When an untutored computer looks at an image, all it sees are thousands of pixels of various colors. With practice and supervision, it can be trained to home in on certain features—say, those that tend to indicate the presence of a human face in a photo—and reliably identify them when they appear. But such training typically requires images that are labeled, so that the computer can tell whether it guessed right or wrong and refine its concept of a human face accordingly. That’s called supervised learning.
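To make the idea concrete, here is a minimal sketch of supervised learning in Python. It is an illustration only, not anything taken from the Google study: the two numeric "features" per image, the toy data, and the function names `train_perceptron` and `predict` are all assumptions made up for this example. The point to notice is that every training example arrives with a label, so the program can check each guess and correct itself.

```python
# A toy perceptron: learns from LABELED examples (supervised learning).
# Features, data, and function names are hypothetical, for illustration only.

def train_perceptron(examples, labels, epochs=20, lr=0.1):
    """Adjust weights whenever a guess disagrees with the known label."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            guess = 1 if activation > 0 else 0
            error = y - guess  # 0 when the guess was right, +1 or -1 when wrong
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, x):
    """Return 1 ("face") or 0 ("not a face") for a feature vector."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


# Each example is a pair of made-up feature scores for one image;
# each label says whether a human marked that image as containing a face.
examples = [(0.9, 0.8), (0.8, 0.9), (0.7, 0.95),
            (0.1, 0.2), (0.2, 0.1), (0.15, 0.3)]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_perceptron(examples, labels)
```

Calling `predict(weights, bias, (0.85, 0.85))` on a new, unlabeled image then gives the program's guess. Without the `labels` list, the training loop would have nothing to compare its guesses against.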

The problem is that most data in the real world doesn’t come in such neat categories. So in this study, the YouTube stills were unlabeled, and the computers weren’t told what they were supposed to be looking for. They had to teach themselves what parts of any given photo might be relevant based solely on patterns in the data. That’s called unsupervised learning.
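A minimal sketch of unsupervised learning, in Python, might look like the following. It uses k-means clustering, a much simpler technique than the neural network the researchers built, swapped in here purely for brevity. The toy data and the names `nearest` and `kmeans` are assumptions for this example. No labels are supplied anywhere: the program groups the points solely by how close they sit to one another.

```python
# Toy k-means clustering: finds groups in UNLABELED data (unsupervised learning).
# This is a stand-in illustration, not the method used in the Google study.

def nearest(point, centroids):
    """Index of the centroid closest to the point (squared Euclidean distance)."""
    distances = [sum((a - b) ** 2 for a, b in zip(point, c)) for c in centroids]
    return distances.index(min(distances))


def kmeans(points, k, iterations=10):
    """Group points into k clusters using only the structure of the data."""
    # Deterministic start for this sketch: the first k points act as centroids.
    centroids = [list(p) for p in points[:k]]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for i, members in enumerate(clusters):
            if members:  # leave a centroid in place if its cluster is empty
                centroids[i] = [sum(dim) / len(members) for dim in zip(*members)]
    assignments = [nearest(p, centroids) for p in points]
    return centroids, assignments


# Made-up feature scores for six images. Nobody has said what any image shows.
points = [(0.9, 0.8), (0.1, 0.2), (0.8, 0.9),
          (0.2, 0.1), (0.7, 0.95), (0.15, 0.3)]

centroids, assignments = kmeans(points, k=2)
```

The resulting `assignments` list puts the three high-scoring points in one group and the three low-scoring points in the other, even though the program was never told that two kinds of image exist or what either kind is called.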

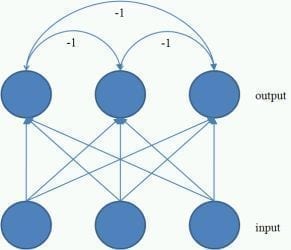

The computers were to develop these concepts using artificial neural networks, systems of distributed information processing analogous to that of the human brain. The goal was to see if Google’s computers could mimic some of the functionality of the human visual cortex, which has evolved to be expert at recognizing the patterns that matter most to us (such as faces and facial expressions).

In fact, Google’s machines did home in on human faces as one of the more relevant features in the data set. They also developed the concepts of cat faces and human bodies—not because they were instructed to, but merely because the arrangement of pixels in image after image suggested that those features might be in some way important.

Google engineering ace Jeff Dean, who helped oversee the project, tells me he was surprised by how well the network accomplished this. In past unsupervised learning tests, machines have managed to attach importance to lower-level features like the edges of an object, but not more abstract features like faces (or cats).

via Slate – Will Oremus
