Google Research has just released details of a machine vision technique that might bring high-powered visual recognition to simple desktop and even mobile computers. It claims to be able to recognize 100,000 different types of object within a photo in a few minutes, and there isn’t a deep neural network (DNN) mentioned.

There has always been a basic split in machine vision work. The engineering approach treats the problem as a signal detection task and applies standard engineering techniques. The “softer” approach tries to build systems that work more like the way humans do things. Recently it is this human-inspired approach that seems to have been on top, with DNNs managing to learn to recognize important features in sample videos. This is very impressive and very important but, as is often the case, the engineering approach also has a trick or two up its sleeve.

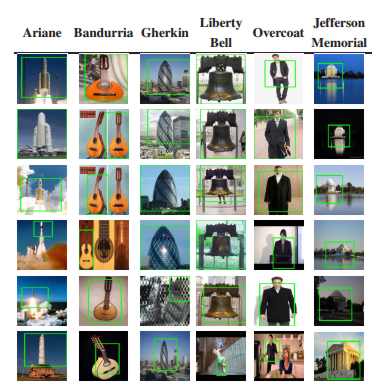

In this case we have improvements to the fairly standard technique of applying convolutional filters to an image to pick out objects of interest. The big problem with convolutional filters is that you need at least one per object type you are looking for – there has to be a cat filter, a dog filter, a human filter and so on. Given that the time it takes to apply a filter doesn’t scale well with image size, most approaches that use this method are limited to a small number of categories of object.
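To make the scaling problem concrete, here is a minimal sketch of the brute-force approach in plain Python. It is an illustration only, not code from the paper: the function names (`correlate`, `detect_brute_force`, `multiply_adds`) and the tiny filters are hypothetical. Every class has its own filter, and every filter is slid across every position of the image, so the work grows with image size times filter size times the number of classes.

```python
def correlate(image, kernel):
    """Slide `kernel` over `image` (valid positions only); return the response map."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0
            # Apply the mask to the pixels under it and sum the result.
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def detect_brute_force(image, filter_bank):
    """Run every filter in the (hypothetical) bank; return the best response per class."""
    return {
        name: max(max(row) for row in correlate(image, kernel))
        for name, kernel in filter_bank.items()
    }


def multiply_adds(image_shape, kernel_shape, num_classes):
    """Count the multiply-adds needed to apply one filter per class to one image."""
    ih, iw = image_shape
    kh, kw = kernel_shape
    positions = (ih - kh + 1) * (iw - kw + 1)
    return positions * kh * kw * num_classes


if __name__ == "__main__":
    image = [[0, 0, 0], [0, 1, 1], [0, 1, 1]]
    bank = {"blob": [[1, 1], [1, 1]], "edge": [[1, -1], [1, -1]]}
    print(detect_brute_force(image, bank))
    # Illustrative sizes only: a 640x480 image, 8x8 filters, 100,000 classes.
    print(multiply_adds((480, 640), (8, 8), 100_000))
```

Because the cost is a straight product of the three factors, adding classes multiplies the whole bill, which is why this style of detector is usually restricted to a handful of categories.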

This year’s winner of the CVPR Best Paper Award, co-authored by Googlers Tom Dean, Mark Ruzon, Mark Segal, Jonathon Shlens, Sudheendra Vijayanarasimhan and Jay Yagnik, describes technology that speeds things up so that many thousands of object categories can be used and the results can be produced in a few minutes with a standard computer.

The technique is complicated, but in essence it makes use of hashing to avoid having to compute everything each time. Locality-sensitive hashing is used to look up the results of each step of the convolution. That is, instead of applying a mask to the pixels and summing the result, the pixels are hashed and the hash is used as a key into a table of results. The authors also use a rank-ordering method that indicates which filters are likely to be the best match and so worth evaluating further. The use of ordinal convolution to replace linear convolution seems to be as important as the use of hashing.
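The combination of hashing and rank ordering described above can be sketched roughly as follows. This is a toy illustration under stated assumptions, not the authors' implementation: it assumes a winner-take-all style ordinal hash (each hash value records which of a few sampled positions holds the largest value) and a banded lookup table that votes for candidate filters. All names (`wta_hash`, `FilterIndex`, the `cat`/`dog`/`car` filters) and parameter values are hypothetical.

```python
import random
from collections import Counter, defaultdict


def make_samples(dim, num_hashes, k, seed=0):
    """For each hash, pick k distinct positions of the input vector to compare."""
    rng = random.Random(seed)
    return [rng.sample(range(dim), k) for _ in range(num_hashes)]


def wta_hash(vec, samples):
    """Ordinal hash: for each sample, record which sampled position holds the max.

    Only the rank order of the values matters, not their magnitudes.
    """
    return tuple(max(range(len(s)), key=lambda i: vec[s[i]]) for s in samples)


def bands(code, band_size):
    """Split a hash code into (offset, chunk) keys for table lookup."""
    return [(b, code[b:b + band_size]) for b in range(0, len(code), band_size)]


class FilterIndex:
    """Hypothetical index: hash every filter once, then look up image windows."""

    def __init__(self, dim, num_hashes=64, k=4, band_size=2, seed=0):
        self.samples = make_samples(dim, num_hashes, k, seed)
        self.band_size = band_size
        self.table = defaultdict(list)  # band key -> names of filters sharing it
        self.filters = {}

    def add(self, name, weights):
        self.filters[name] = weights
        for key in bands(wta_hash(weights, self.samples), self.band_size):
            self.table[key].append(name)

    def candidates(self, window, top=3):
        """Hash the window and return the filters that share the most bands."""
        votes = Counter()
        for key in bands(wta_hash(window, self.samples), self.band_size):
            votes.update(self.table.get(key, ()))
        return [name for name, _ in votes.most_common(top)]

    def detect(self, window, top=3):
        """Evaluate the exact dot product only for the short-listed filters."""
        scored = [
            (sum(w * x for w, x in zip(self.filters[n], window)), n)
            for n in self.candidates(window, top)
        ]
        return max(scored)[1] if scored else None


if __name__ == "__main__":
    index = FilterIndex(dim=8)
    index.add("cat", [9, 1, 8, 2, 7, 3, 6, 4])
    index.add("dog", [1, 9, 2, 8, 3, 7, 4, 6])
    index.add("car", [4, 6, 3, 7, 2, 8, 1, 9])
    window = [2 * v + 5 for v in [9, 1, 8, 2, 7, 3, 6, 4]]  # brighter "cat"
    print(index.candidates(window), index.detect(window))
```

The point of the design is that the per-window cost depends on the number of hash lookups rather than on the number of filters: the filters are hashed once up front, and the expensive exact score is computed only for the few that collect the most votes.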

The result of the change to the basic algorithm is a speedup of around 20,000 times, which is astounding.
