A new machine learning method developed by researchers at the University of Helsinki, Aalto University and Waseda University in Tokyo can use, for example, data stored on cell phones while guaranteeing the privacy of data subjects.

Modern AI is based on machine learning, which creates models by learning from data. The data used in many applications, such as health and human behaviour, is private and needs protection. New privacy-aware machine learning methods based on the concept of differential privacy have been developed recently. They guarantee that the published model or result can reveal only a limited amount of information about each data subject.
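To make the idea of differential privacy concrete, here is a minimal sketch of one standard textbook technique, the Laplace mechanism, applied to a simple average. It is not the researchers' method, which the article does not describe in detail. The function names `laplace_noise` and `dp_mean`, the bounds and the value of `epsilon` are all assumptions chosen for illustration, and the number of records is treated as public.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponential variables with mean
    # `scale` follows a Laplace distribution centred at 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_mean(values, lower, upper, epsilon, rng=None):
    """Illustrative epsilon-differentially private mean (hypothetical helper).

    Assumes every record is clipped to [lower, upper] and that the number
    of records is public knowledge.
    """
    rng = rng or random.Random()
    # Clipping bounds how much any single record can influence the result.
    clipped = [min(max(v, lower), upper) for v in values]
    n = len(clipped)
    # Replacing one record changes the clipped mean by at most this amount.
    sensitivity = (upper - lower) / n
    # Smaller epsilon means more noise and a stronger privacy guarantee.
    return sum(clipped) / n + laplace_noise(sensitivity / epsilon, rng)


# Example with made-up ages; the printed value varies from run to run.
ages = [23, 35, 41, 29, 52, 38]
print(dp_mean(ages, lower=0, upper=100, epsilon=1.0))
```

Because the added noise is scaled to the largest effect a single person can have on the average, the published number reveals only limited information about any one individual. A sketch like this is for illustration only; production systems need more careful handling of floating-point arithmetic and of the overall privacy budget.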

Privacy-aware machine learning

“Previously you needed one party with unrestricted access to all the data. Our new method enables learning accurate models for example using data on user devices without the need to reveal private information to any outsider”, Assistant Professor Antti Honkela of the University of Helsinki says.

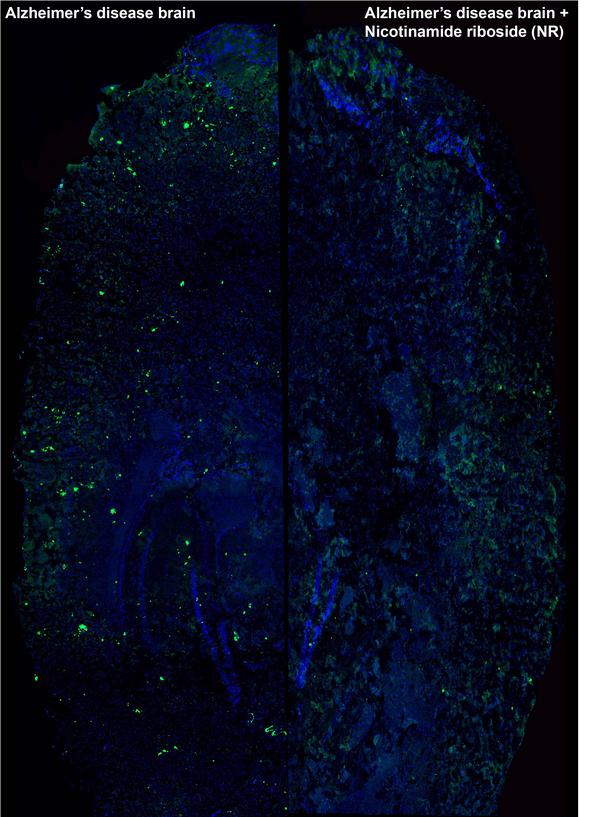

The group of researchers at the University of Helsinki and Aalto University, Finland, has applied privacy-aware methods to, for example, predicting cancer drug efficacy using gene expression data.

“We have developed these methods with funding from the Academy of Finland for a few years, and now things are starting to look promising. Learning from big data is easier, but now we can also get results from smaller data”, Academy Professor Samuel Kaski of Aalto University says.

Learn more: New AI method keeps data private
