Studies suggest that computer models called neural networks may learn to recognize patterns in data using the same algorithms as the human brain

The brain performs its canonical task — learning — by tweaking its myriad connections according to a secret set of rules. To unlock these secrets, scientists 30 years ago began developing computer models that try to replicate the learning process. Now, a growing number of experiments are revealing that these models behave strikingly similarly to actual brains when performing certain tasks. Researchers say the similarities suggest a basic correspondence between the brains’ and computers’ underlying learning algorithms.

The algorithm used by a computer model called the Boltzmann machine, invented by Geoffrey Hinton and Terry Sejnowski in 1983, appears particularly promising as a simple theoretical explanation of a number of brain processes, including development, memory formation, object and sound recognition, and the sleep-wake cycle.

“It’s the best possibility we really have for understanding the brain at present,” said Sue Becker, a professor of psychology, neuroscience, and behavior at McMaster University in Hamilton, Ontario. “I don’t know of a model that explains a wider range of phenomena in terms of learning and the structure of the brain.”

Hinton, a pioneer in the field of artificial intelligence, has always wanted to understand the rules governing when the brain beefs a connection up and when it whittles one down — in short, the algorithm for how we learn. “It seemed to me if you want to understand something, you need to be able to build one,” he said. Following the reductionist approach of physics, he planned to construct simple computer models of the brain that employed a variety of learning algorithms and “see which ones work,” said Hinton, who splits his time between the University of Toronto, where he is a professor of computer science, and Google.

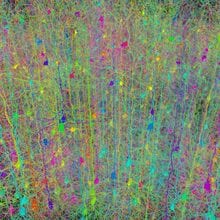

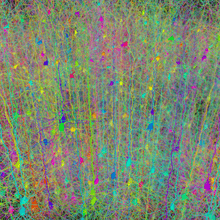

During the 1980s and 1990s, Hinton — the great-great-grandson of the 19th-century logician George Boole, whose work is the foundation of modern computer science — invented or co-invented a collection of machine learning algorithms. The algorithms, which tell computers how to learn from data, are used in computer models called artificial neural networks — webs of interconnected virtual neurons that transmit signals to their neighbors by switching on and off, or “firing.” When data are fed into the network, setting off a cascade of firing activity, the algorithm determines, based on the firing patterns, whether to increase or decrease the weight of the connection, or synapse, between each pair of neurons.
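To make the idea concrete, here is a minimal Python sketch of one learning rule of this kind. It assumes a restricted Boltzmann machine (a simplified Boltzmann machine with no connections inside a layer) trained with contrastive divergence, a later shortcut developed by Hinton; the class and function names (`TinyRBM`, `sample_fire`, `reconstruction_error`), the network size, and the learning rate are illustrative assumptions, not the specific algorithms examined in the studies described here. Each virtual neuron fires (1) or stays off (0) at random, with a probability set by its weighted input, and each weight is nudged up or down by comparing how often the two neurons it links fire together on real data versus on the network's own reconstruction.

```python
import math
import random


def sigmoid(x: float) -> float:
    # Numerically stable logistic function: maps net input to a firing probability.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sample_fire(prob: float, rng: random.Random) -> int:
    # A binary neuron "fires" (1) with the given probability, else stays off (0).
    return 1 if rng.random() < prob else 0


class TinyRBM:
    """Hypothetical toy restricted Boltzmann machine (visible <-> hidden only)."""

    def __init__(self, n_visible: int, n_hidden: int, seed: int = 0):
        self.rng = random.Random(seed)
        self.n_visible = n_visible
        self.n_hidden = n_hidden
        # w[i][j] is the connection ("synapse") weight between visible i and hidden j.
        self.w = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b_vis = [0.0] * n_visible
        self.b_hid = [0.0] * n_hidden

    def hidden_probs(self, v):
        return [sigmoid(self.b_hid[j] + sum(v[i] * self.w[i][j] for i in range(self.n_visible)))
                for j in range(self.n_hidden)]

    def visible_probs(self, h):
        return [sigmoid(self.b_vis[i] + sum(h[j] * self.w[i][j] for j in range(self.n_hidden)))
                for i in range(self.n_visible)]

    def train_step(self, v0, lr: float = 0.1) -> None:
        # Data phase: clamp the input pattern and let the hidden neurons fire.
        ph0 = self.hidden_probs(v0)
        h0 = [sample_fire(p, self.rng) for p in ph0]
        # Model phase: one step of free-running reconstruction from the hidden firing.
        v1 = [sample_fire(p, self.rng) for p in self.visible_probs(h0)]
        ph1 = self.hidden_probs(v1)
        # Strengthen connections whose neurons co-fire on real data and
        # weaken those whose neurons co-fire in the model's own reconstruction.
        for i in range(self.n_visible):
            for j in range(self.n_hidden):
                self.w[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        for i in range(self.n_visible):
            self.b_vis[i] += lr * (v0[i] - v1[i])
        for j in range(self.n_hidden):
            self.b_hid[j] += lr * (ph0[j] - ph1[j])

    def reconstruct(self, v):
        # Deterministic reconstruction using probabilities instead of samples.
        return self.visible_probs(self.hidden_probs(v))


def reconstruction_error(rbm: TinyRBM, patterns) -> float:
    # Sum of squared differences between each pattern and its reconstruction.
    return sum((x - r) ** 2
               for p in patterns
               for x, r in zip(p, rbm.reconstruct(p)))
```

For instance, training a six-input, two-hidden-unit network on the patterns `[1, 1, 1, 0, 0, 0]` and `[0, 0, 0, 1, 1, 1]` for a couple of thousand passes should lower `reconstruction_error`, because the weights come to encode which inputs tend to fire together. The update uses only local quantities (how often the two neurons at either end of a connection are active), which is the property that makes rules of this family attractive as models of synaptic learning.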
