From Apple’s Siri to Honda’s robot Asimo, machines seem to be getting better and better at communicating with humans.

But some neuroscientists caution that today’s computers will never truly understand what we’re saying because they do not take into account the context of a conversation the way people do.

Specifically, machines don’t develop a shared understanding of the people, place and situation – often including a long social history – that is key to human communication, say University of California, Berkeley, postdoctoral fellow Arjen Stolk and his Dutch colleagues. Without such common ground, a computer cannot help but be confused.

“People tend to think of communication as an exchange of linguistic signs or gestures, forgetting that much of communication is about the social context, about who you are communicating with,” Stolk said.

The word “bank,” for example, would be interpreted one way if you’re holding a credit card but a different way if you’re holding a fishing pole. Without context, making a “V” with two fingers could mean victory, the number two, or “these are the two fingers I broke.”

“All these subtleties are quite crucial to understanding one another,” Stolk said, perhaps more so than the words and signals that computers and many neuroscientists focus on as the key to communication. “In fact, we can understand one another without language, without words and signs that already have a shared meaning.”

Babies and parents, not to mention strangers lacking a common language, communicate effectively all the time, based solely on gestures and a shared context they build up over even a short time.

Stolk argues that scientists and engineers should focus more on the contextual aspects of mutual understanding. He bases his argument on experimental evidence from brain scans showing that humans achieve nonverbal mutual understanding using unique computational and neural mechanisms. Some of the studies Stolk has conducted suggest that a breakdown in mutual understanding is behind social disorders such as autism.

“This shift in understanding how people communicate without any need for language provides a new theoretical and empirical foundation for understanding normal social communication, and provides a new window into understanding and treating disorders of social communication in neurological and neurodevelopmental disorders,” said Dr. Robert Knight, a UC Berkeley professor of psychology in the campus’s Helen Wills Neuroscience Institute and a professor of neurology and neurosurgery at UCSF.

Stolk and his colleagues discuss the importance of conceptual alignment for mutual understanding in an opinion piece appearing Jan. 11 in the journal Trends in Cognitive Sciences.

Brain scans pinpoint site for ‘meeting of minds’

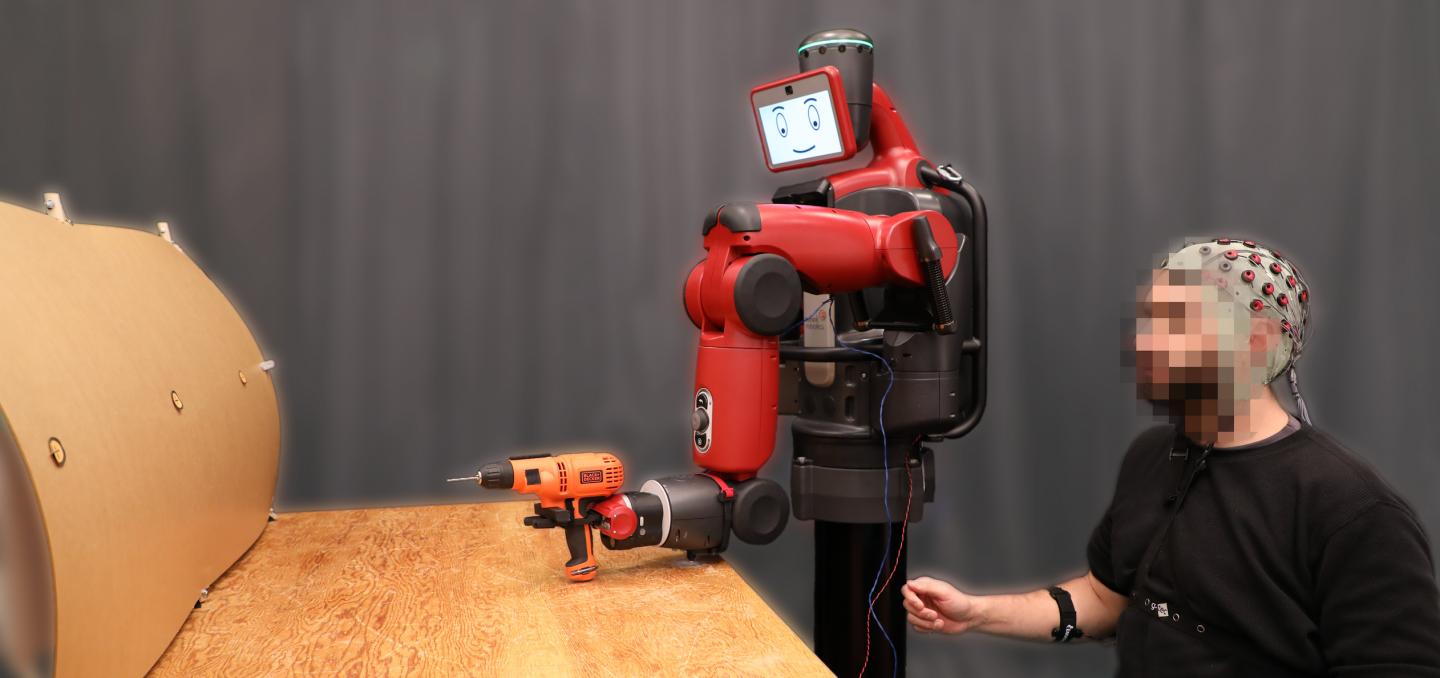

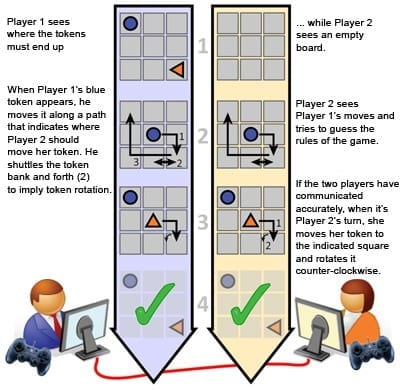

To explore how brains achieve mutual understanding, Stolk created a game that requires two players to communicate the rules to each other solely by game movements, without talking or even seeing one another, eliminating the influence of language or gesture. He then scanned both players’ brains with fMRI (functional magnetic resonance imaging) as they nonverbally communicated with one another via computer.

He found that the same regions of the brain – located in the poorly understood right temporal lobe, just above the ear – became active in both players during attempts to communicate the rules of the game. Critically, the superior temporal gyrus of the right temporal lobe maintained a steady, baseline activity throughout the game but became more active when one player suddenly understood what the other player was trying to communicate. The brain’s right hemisphere is more involved in abstract thought and social interactions than the left hemisphere.

“These regions in the right temporal lobe increase in activity the moment you establish a shared meaning for something, but not when you communicate a signal,” Stolk said. “The better the players got at understanding each other, the more active this region became.”

This means that both players are building a similar conceptual framework in the same area of the brain, constantly testing one another to make sure their concepts align, and updating only when new information changes that mutual understanding. The results were reported in 2014 in the Proceedings of the National Academy of Sciences.

“It is surprising,” said Stolk, “that for both the communicator, who has static input while she is planning her move, and the addressee, who is observing dynamic visual input during the game, the same region of the brain becomes more active over the course of the experiment as they improve their mutual understanding.”

Robots’ statistical reasoning

Robots and computers, on the other hand, converse based on a statistical analysis of a word’s meaning, Stolk said. If you usually use the word “bank” to mean a place to cash a check, then that will be the assumed meaning in a conversation, even when the conversation is about fishing.
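The contrast Stolk describes can be made concrete with a toy sketch. The Python below is purely illustrative and is not taken from Stolk's work or from any real system: the usage counts, the cue-word lists, and both function names are hypothetical. The first function always returns the sense of a word that has been seen most often; the second first checks how many nearby words hint at each sense, and falls back on frequency only when the context gives no hint. Keyword overlap is a crude stand-in for the rich shared context people build up in conversation.

```python
from collections import Counter

# Hypothetical usage history: how often each sense of "bank" was seen before.
SENSE_COUNTS = {
    "bank": Counter({"financial institution": 9, "river edge": 1}),
}

# Hypothetical cue words loosely associated with each sense.
SENSE_CUES = {
    "financial institution": {"check", "cash", "credit", "card", "loan"},
    "river edge": {"fishing", "pole", "river", "water", "boat"},
}


def most_frequent_sense(word):
    """Purely statistical guess: return the sense seen most often so far."""
    return SENSE_COUNTS[word].most_common(1)[0][0]


def context_aware_sense(word, context_words):
    """Prefer the sense whose cue words overlap the context the most.

    Ties (including no overlap at all) are broken by past frequency.
    """
    context = {w.lower() for w in context_words}

    def score(sense):
        overlap = len(SENSE_CUES[sense] & context)
        return (overlap, SENSE_COUNTS[word][sense])

    return max(SENSE_COUNTS[word], key=score)


sentence = "we took the fishing pole down to the bank".split()
print(most_frequent_sense("bank"))            # ignores the fishing trip
print(context_aware_sense("bank", sentence))  # uses the surrounding words
```

In this sketch the frequency-only guess picks the financial sense even in a sentence about fishing, while the second function picks the riverside sense because "fishing" and "pole" appear nearby.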

