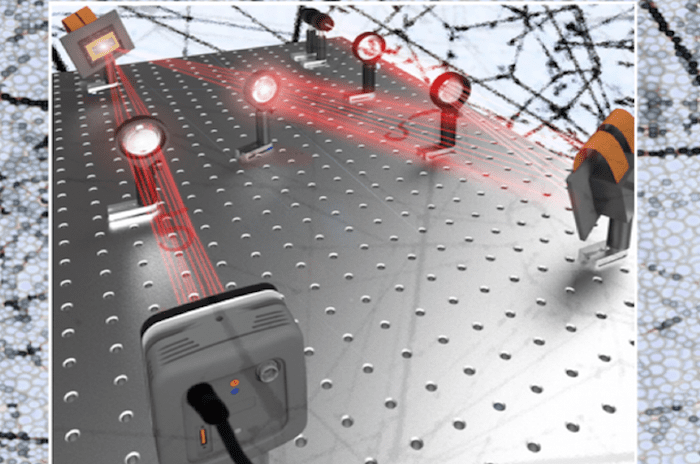

A massively parallel amplitude-only Fourier neural network

Researchers invent an optical convolutional neural network accelerator for machine learning

SUMMARY

Researchers at the George Washington University, together with researchers at the University of California, Los Angeles, and the deep-tech venture startup Optelligence LLC, have developed an optical convolutional neural network accelerator capable of processing large amounts of information, on the order of petabytes per second. This innovation, which harnesses the massive parallelism of light, heralds a new era of optical signal processing for machine learning, with numerous applications including self-driving cars, 5G networks, data centers, biomedical diagnostics, data security and more.

THE SITUATION

Global demand for machine learning hardware is dramatically outpacing the available supply of computing power. State-of-the-art electronic hardware, such as graphics processing units and tensor processing unit accelerators, helps mitigate this, but is intrinsically limited by serial, iterative data processing and by delays from wiring and circuit constraints. Optical alternatives to electronic hardware could speed up machine learning by processing information in a simpler, non-iterative way. However, photonic machine learning is typically limited by the number of components that can be placed on a photonic integrated circuit, which limits interconnectivity, while free-space spatial light modulators are restricted to slow programming speeds.

THE SOLUTION

To achieve a breakthrough in this optical machine learning system, the researchers replaced spatial light modulators with digital mirror-based technology, thus developing a system over 100 times faster. The non-iterative timing of this processor, in combination with rapid programmability and massive parallelization, enables this optical machine learning system to outperform even the top-of-the-line graphics processing units by over one order of magnitude, with room for further optimization beyond the initial prototype.

Unlike the current paradigm in electronic machine learning hardware, which processes information sequentially, this processor uses Fourier optics, a frequency-filtering concept that allows the convolutions required by the neural network to be performed as much simpler element-wise multiplications using the digital mirror technology.
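The idea that a convolution becomes an element-wise multiplication in the Fourier domain can be checked numerically. The following is a minimal sketch in plain Python, not the researchers' implementation: it works in one dimension with a naive discrete Fourier transform, whereas the optical system operates on two-dimensional images with lenses and a mirror array. The function names (`dft`, `circular_convolve_direct`, `circular_convolve_fourier`) and the sample values are illustrative assumptions.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform, for illustration only."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
        out.append(s / n if inverse else s)
    return out


def circular_convolve_direct(signal, kernel):
    """Circular convolution by explicit summation (the 'sequential' route)."""
    n = len(signal)
    return [
        sum(signal[m] * kernel[(i - m) % n] for m in range(n))
        for i in range(n)
    ]


def circular_convolve_fourier(signal, kernel):
    """Same result via the convolution theorem:
    transform both inputs, multiply element-wise, transform back."""
    spectrum_signal = dft(signal)
    spectrum_kernel = dft(kernel)
    product = [s * k for s, k in zip(spectrum_signal, spectrum_kernel)]
    return [value.real for value in dft(product, inverse=True)]


# Hypothetical sample data; the kernel is zero-padded to the signal length.
signal = [1.0, 2.0, 3.0, 4.0, 0.0, 0.0, 0.0, 0.0]
kernel = [0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0, 0.25]

direct = circular_convolve_direct(signal, kernel)
fourier = circular_convolve_fourier(signal, kernel)

# Both routes agree up to floating-point rounding.
assert all(abs(a - b) < 1e-9 for a, b in zip(direct, fourier))
```

In this sketch the only step that involves the kernel after the transform is the element-wise product, which corresponds to the filtering role the article attributes to the digital mirror technology.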
