Scientists like to think of science as self-correcting. To an alarming degree, it is not.

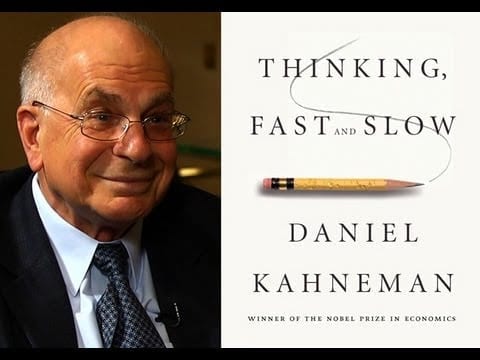

“I SEE a train wreck looming,” warned Daniel Kahneman, an eminent psychologist, in an open letter last year. The premonition concerned research on a phenomenon known as “priming”. Priming studies suggest that decisions can be influenced by apparently irrelevant actions or events that took place just before the cusp of choice. They have been a boom area in psychology over the past decade, and some of their insights have already made it out of the lab and into the toolkits of policy wonks keen on “nudging” the populace.

Dr Kahneman and a growing number of his colleagues fear that a lot of this priming research is poorly founded. Over the past few years various researchers have made systematic attempts to replicate some of the more widely cited priming experiments. Many of these replications have failed. In April, for instance, a paper in PLoS ONE, a journal, reported that nine separate experiments had not managed to reproduce the results of a famous study from 1998 purporting to show that thinking about a professor before taking an intelligence test leads to a higher score than imagining a football hooligan.

The idea that the same experiments always get the same results, no matter who performs them, is one of the cornerstones of science’s claim to objective truth. If a systematic campaign of replication does not lead to the same results, then either the original research is flawed (as the replicators claim) or the replications are (as many of the original researchers on priming contend). Either way, something is awry.

To err is all too common

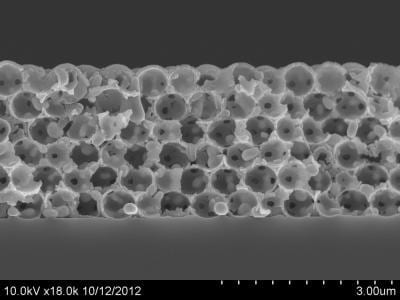

It is tempting to see the priming fracas as an isolated case in an area of science—psychology—easily marginalised as soft and wayward. But irreproducibility is much more widespread. A few years ago scientists at Amgen, an American drug company, tried to replicate 53 studies that they considered landmarks in the basic science of cancer, often co-operating closely with the original researchers to ensure that their experimental technique matched the one used first time round. According to a piece they wrote last year in Nature, a leading scientific journal, they were able to reproduce the original results in just six. Months earlier Florian Prinz and his colleagues at Bayer HealthCare, a German pharmaceutical giant, reported in Nature Reviews Drug Discovery, a sister journal, that they had successfully reproduced the published results in just a quarter of 67 seminal studies.

The governments of the OECD, a club of mostly rich countries, spent $59 billion on biomedical research in 2012, nearly double the figure in 2000. One of the justifications for this is that basic-science results provided by governments form the basis for private drug-development work. If companies cannot rely on academic research, that reasoning breaks down. When an official at America’s National Institutes of Health (NIH) reckons, despairingly, that researchers would find it hard to reproduce at least three-quarters of all published biomedical findings, the public part of the process seems to have failed.

Academic scientists readily acknowledge that they often get things wrong. But they also hold fast to the idea that these errors get corrected over time as other scientists try to take the work further. Evidence that many more dodgy results are published than are subsequently corrected or withdrawn calls that much-vaunted capacity for self-correction into question. There are errors in a lot more of the scientific papers being published, written about and acted on than anyone would normally suppose, or like to think.

Various factors contribute to the problem. Statistical mistakes are widespread. The peer reviewers who evaluate papers before journals commit to publishing them are much worse at spotting mistakes than they or others appreciate. Professional pressure, competition and ambition push scientists to publish more quickly than would be wise. A career structure which lays great stress on publishing copious papers exacerbates all these problems. “There is no cost to getting things wrong,” says Brian Nosek, a psychologist at the University of Virginia who has taken an interest in his discipline’s persistent errors. “The cost is not getting them published.”

First, the statistics, which, if perhaps off-putting, are quite crucial.
