Forget voice control or gesture recognition: gadgets may soon link directly to our brains

Okay, great: we can control our phones with speech recognition and our television sets with gesture recognition. But those technologies don’t work in all situations for all people. So I say, forget about those crude beginnings; what we really want is thought recognition.

As I found out during research for a recent NOVA episode, it appears that brain-computer interface (BCI) technology has not advanced very far just yet. For example, I tried to make a toy helicopter fly by thinking “up” as I wore a $300 commercial EEG headset. It barely worked.

Such “mind-reading” caps are quick to put on and noninvasive. They listen, through your scalp, for the incredibly weak remnants of electrical signals from your brain activity. But they’re lousy at figuring out where in your brain they originated. Furthermore, the headset software didn’t even know that I was thinking “up.” I could just as easily have thought “goofy” or “shoelace” or “pickle”—whatever I had thought about during the 15-second training session.

There are other noninvasive brain scanners (magnetoencephalography, positron-emission tomography, near-infrared spectroscopy, and so on), but each also has its trade-offs.

Of course, you can implant sensors inside someone’s skull for the best readings of all; immobilized patients have successfully manipulated computer cursors and robotic arms using this approach. Still, when it comes to controlling everyday electronics, brain surgery might be a tough sell.

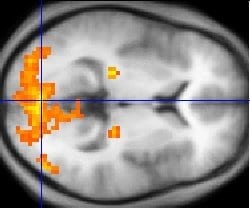

My most astonishing discovery came at Carnegie Mellon University, where Marcel Just and Tom Mitchell have been using real-time functional MRI scanners to do some actual mind reading—or thought recognition, as they more responsibly call it.

As I lay in the fMRI, I saw 20 images on the screen (of a strawberry, skyscraper, cave, and so on). I was instructed to imagine the qualities of each object. For each pair of objects, the computer would try to figure out the order in which I had just seen the two images (whether strawberry had come before skyscraper, for example). It got them 100 percent right.

via Scientific American – David Pogue
