Deep learning has created a resurgence of interest in neural networks and their application to everything from Internet search to self-driving cars. Results published in the scientific and technical literature show better-than-human accuracy on real-world tasks that include speech and facial recognition.

Modern massively parallel computing technology now makes it possible to train very complex multi-layer neural network architectures on large data sets to an acceptable degree of accuracy. This is referred to as deep learning because these multi-layer networks interpose many neuronal processing layers between the input data and the output results calculated by the neural network; hence the word ‘deep’ in the ‘deep learning’ catchphrase. The resulting trained networks can be extremely valuable because they can perform complex, real-world pattern recognition tasks very quickly on a variety of low-power devices, including sensors, mobile phones, and FPGAs, as well as quickly and economically in the data center.

Generic applicability, high accuracy (sometimes better than human), and the ability to be deployed nearly everywhere explain why scientists, technologists, entrepreneurs, and companies are all scrambling to take advantage of deep-learning technology.

Machine learning went through a similar bandwagon stage in the 1980s, when the technology was lauded with superlatives and futurists discussed how machine learning was going to change the world. The genesis of the 1980s machine-learning revolution was a seminal paper by Hopfield and Tank, “Neural Computation of Decisions in Optimization Problems,” which showed that good solutions to a wide class of combinatorial optimization problems could be found using networks of biology-inspired neurons. In particular, Hopfield and Tank demonstrated that they could find good solutions to intractably large versions of the NP-complete traveling salesman problem.

The advent of backpropagation by Rumelhart, Hinton and Williams made it possible to adjust the weights in a ‘neurone-like’ network so that the network could be trained to solve a computational problem from example data. In particular, the ability of neural networks to adjust their weights to learn all the logic functions required to build a general-purpose computer, including the non-linear XOR truth table, showed that artificial neural networks (ANNs) are computationally universal devices that can, in theory, be trained to solve any computable problem. I like to joke that machine learning made me one of the hardest-working lazy men you would ever meet, as I was willing to work very hard to make the computer teach itself to solve a complex problem.
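To make the XOR point concrete, here is a minimal sketch of backpropagation training a tiny network on the XOR truth table. It is an illustration under stated assumptions and not the original Rumelhart, Hinton and Williams setup. The network has two inputs, four tanh hidden units, and one sigmoid output, and it uses a cross-entropy loss, online gradient descent, and a fixed learning rate and epoch count. All of these choices are assumptions. The names `train_xor` and `XOR_DATA` are hypothetical.

```python
import math
import random

# XOR truth table: (inputs, target). No single-layer network can represent it.
XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_xor(hidden=4, lr=0.3, epochs=10000, seed=0):
    """Train a 2-input, `hidden`-unit, 1-output network on XOR by backpropagation.

    Architecture, loss, and hyperparameters are illustrative assumptions.
    """
    rng = random.Random(seed)
    # w_h[j] = [weight from x0, weight from x1, bias] for hidden unit j.
    w_h = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(hidden)]
    # w_o = hidden-to-output weights, followed by the output bias.
    w_o = [rng.uniform(-1.0, 1.0) for _ in range(hidden + 1)]

    def forward(x):
        h = [math.tanh(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w_h]
        y = sigmoid(sum(w_o[j] * h[j] for j in range(hidden)) + w_o[hidden])
        return h, y

    for _ in range(epochs):
        for x, target in XOR_DATA:  # online (per-example) gradient descent
            h, y = forward(x)
            # Error signal at the output (sigmoid output + cross-entropy loss).
            delta_o = y - target
            # Backpropagate to the tanh hidden units using the pre-update w_o.
            delta_h = [delta_o * w_o[j] * (1.0 - h[j] ** 2) for j in range(hidden)]
            # Gradient-descent weight updates: output layer, then hidden layer.
            for j in range(hidden):
                w_o[j] -= lr * delta_o * h[j]
            w_o[hidden] -= lr * delta_o
            for j in range(hidden):
                w_h[j][0] -= lr * delta_h[j] * x[0]
                w_h[j][1] -= lr * delta_h[j] * x[1]
                w_h[j][2] -= lr * delta_h[j]

    # Return a predictor that uses the trained (in-place updated) weights.
    return lambda x: forward(x)[1]


predict = train_xor()
for x, target in XOR_DATA:
    print(x, target, round(predict(x), 3))
```

The hidden layer matters here. Each hidden unit learns its own linear boundary, and the output unit combines those boundaries. No single linear boundary can separate XOR's two classes.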

NetTalk, a beautiful example by Terry Sejnowski and Charles Rosenberg, showed that it was possible to teach a neural network to perform a task of human-level complexity: reading English text aloud. Even grade-school children immediately grasp the implications of machine learning through the NetTalk example, as people can literally hear the ANN learn to read aloud. Further, it is easy to show that the ANN had ‘learned’ a general solution to the problem of reading aloud, as it could correctly pronounce words that it had never seen before. I use NetTalk as a stellar example of how scientists can create simple and intuitively obvious examples to communicate their research to anyone.

The bandwagon faded for ANNs during the mid-1990s as overblown claims and a lull in the development of parallel computing exhausted the patience of funding agencies; the lull in parallel computing also limited the size and complexity of the problems that could be addressed. Neural networks faded from the scientific limelight, although research continued to expand and mature the technology. Still, examples such as Star Trek’s Commander Data preserved the popular perception of the potential of neural network technology.

The development of low-cost massively parallel devices like GPUs sparked a resurgence in the popularity of neural network research. Instead of spending $30M to purchase a 60 GF/s (billion flop/s) Connection Machine, modern researchers can now purchase a TF/s-capable (trillion flop/s) GPU for around a hundred dollars. The parallel mapping pioneered by Farber on the Connection Machine at Los Alamos allows the computationally expensive training step to map very efficiently onto any SIMD architecture, be it a GPU or the vector architecture of an Intel Xeon or Intel Xeon Phi processor. Near-linear scalability in a distributed computing environment means that most computational clusters can achieve very efficient, near-peak performance during the training phase. For example, the 1980s mapping used on the Connection Machine achieved over 13 PF/s (1 PF/s = 10¹⁵ flop/s) of average sustained performance on the Oak Ridge National Laboratory Titan supercomputer. The ability to run efficiently on large numbers of either vector or GPU devices means that researchers can work with complex neural networks and large data sets to solve problems, sometimes as well as or better than humans.
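Training can scale nearly linearly because its expensive part is evaluating the network's error over every training example. That work splits into independent pieces, and a single reduction combines them. The sketch below illustrates that generic data-parallel pattern in Python. It is an assumption-laden illustration, not Farber's Connection Machine code. The one-neuron `model`, the strided sharding, and the helper names are all hypothetical. Threads stand in for SIMD lanes or cluster nodes, so the sketch shows the structure of the computation rather than any real speedup.

```python
import math
from concurrent.futures import ThreadPoolExecutor

# Generic data-parallel sketch (an assumption), not Farber's original mapping.


def model(params, x):
    # Hypothetical one-neuron model: y = tanh(w * x + b).
    w, b = params
    return math.tanh(w * x + b)


def shard_error(params, shard):
    """Partial sum of squared errors over one shard of the training examples."""
    return sum((model(params, x) - t) ** 2 for x, t in shard)


def parallel_error(params, data, n_workers=4):
    """Evaluate the full training objective by sharding the examples across workers."""
    # Strided split: worker i gets examples i, i + n_workers, i + 2 * n_workers, ...
    shards = [data[i::n_workers] for i in range(n_workers)]
    # Each shard is evaluated independently; workers never talk to each other here.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(lambda s: shard_error(params, s), shards))
    # The only communication step: one reduction (a sum) of the partial errors.
    return sum(partials)


# Usage: a synthetic training set, evaluated serially and in sharded form.
data = [(i / 100.0, math.sin(i / 100.0)) for i in range(1000)]
params = (0.8, 0.1)
serial = shard_error(params, data)
sharded = parallel_error(params, data)
print(serial, sharded)
```

The serial and sharded results agree up to floating-point summation order. An optimizer driving training only needs the reduced total. Adding workers therefore divides the dominant per-example work, while the communication stays a handful of scalars.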


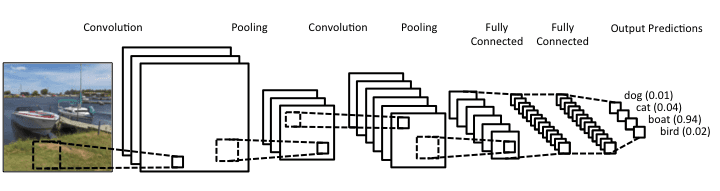

Convolutional neural networks (CNNs) are a form of ‘deep’ neural network architecture popularized by Yann LeCun and others in 1998. CNNs are behind many of the deep-learning successes that have been reported recently in image and speech recognition. Again inspired by biology, these neural networks learn to find features in the data that permit correct classification or recognition of the training images, without a human having to design those features. This lack of dependence on prior knowledge and human effort is considered a major advantage of CNNs over other approaches.
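The core operation behind the features a CNN finds is a small filter slid across the image. Here is a minimal sketch in plain Python. It assumes a single-channel image, no padding, stride 1, and the cross-correlation form that most deep-learning frameworks call ‘convolution’. The function name `conv2d` is hypothetical. The kernel is hand-set to detect a vertical edge purely for illustration. In a trained CNN, such weights are learned from the training images rather than chosen by a person.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation (stride 1, no padding) of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw patch whose top-left corner is (i, j).
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


# A 4x4 image: dark on the left, bright on the right (one vertical edge).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-set vertical-edge detector (illustrative; a CNN would learn its kernels).
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only in the column where the brightness changes. A CNN stacks many such learned filters, layer upon layer, to build up the features it uses for classification.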

Learn more: Deep Learning Revitalizes Neural Networks to Match or Beat Humans on Complex Tasks

