A smart device that translates sign language while being worn on the wrist could bridge the communications gap between the deaf and those who don’t know sign language, says a Texas A&M University biomedical engineering researcher who is developing the technology.

The wearable technology combines motion sensors and the measurement of electrical activity generated by muscles to interpret hand gestures, says Roozbeh Jafari, associate professor in the university’s Department of Biomedical Engineering and researcher at the Center for Remote Health Technologies and Systems.

Although the device is still in its prototype stage, it can already recognize 40 American Sign Language words with nearly 96 percent accuracy, notes Jafari, who presented his research at the Institute of Electrical and Electronics Engineers (IEEE) 12th Annual Body Sensor Networks Conference this past June. The technology was among the top award winners in the Texas Instruments Innovation Challenge this past summer.

The technology, developed in collaboration with Texas Instruments, reflects a growing interest in high-tech sign language recognition (SLR) systems. Unlike other recent initiatives, however, Jafari’s system forgoes the use of a camera to capture gestures. Video-based recognition, he says, can perform poorly in low light, and the videos or images captured may be considered invasive to the user’s privacy. What’s more, because these systems require a user to gesture in front of a camera, they have limited wearability, and wearability, for Jafari, is key.

“Wearables provide a very interesting opportunity in the sense of their tight coupling with the human body,” Jafari says. “Because they are attached to our body, they know quite a bit about us throughout the day, and they can provide us with valuable feedback at the right times. With this in mind, we wanted to develop a technology in the form factor of a watch.”

In order to capture the intricacies of American Sign Language, Jafari’s system makes use of two distinct sensors. The first is an inertial sensor that responds to motion. Consisting of an accelerometer and gyroscope, the sensor measures the accelerations and angular velocities of the hand and arm, Jafari notes. This sensor plays a major role in discriminating different signs by capturing the user’s hand orientations and hand and arm movements during a gesture.

However, a motion sensor alone wasn’t enough, Jafari explains. Certain signs in American Sign Language are similar in terms of the gestures required to convey the word. With these gestures, the overall movement of the hand may be the same for two different signs while the movement of individual fingers differs.

For example, the respective gestures for “please” and “sorry” and for “name” and “work” are similar in hand motion. To discriminate between these types of hand gestures, Jafari’s system makes use of another type of sensor that measures muscle activity.

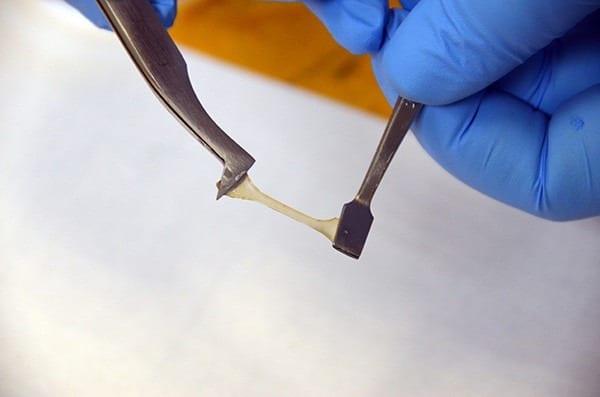

Known as a surface electromyographic (sEMG) sensor, it non-invasively measures the electrical potential of muscle activity, Jafari explains. It is used to distinguish various hand and finger movements based on different muscle activities. Essentially, it’s good at measuring finger movements and the muscle activity patterns of the hand and arm, and it works in tandem with the motion sensor to provide a more accurate interpretation of the gesture being signed, he says.
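To illustrate why pairing the two sensors helps, here is a minimal Python sketch of fusing inertial and sEMG readings into one feature vector. It is not taken from Jafari’s system: the function names, the choice of features (per-axis mean and standard deviation, per-channel root-mean-square amplitude), the two-channel sEMG layout and the sample values are all assumptions made for illustration.

```python
import math
from statistics import mean, pstdev


def imu_features(imu_window):
    """Mean and standard deviation of each inertial axis.

    imu_window: list of samples, each a tuple (ax, ay, az, gx, gy, gz)
    holding accelerometer and gyroscope readings (hypothetical layout).
    """
    axes = list(zip(*imu_window))  # transpose: one sequence per axis
    return [stat(axis) for axis in axes for stat in (mean, pstdev)]


def emg_features(emg_window):
    """Root-mean-square amplitude of each sEMG channel.

    emg_window: list of samples, each a tuple with one value per channel.
    """
    channels = list(zip(*emg_window))
    return [math.sqrt(mean([v * v for v in ch])) for ch in channels]


def fused_features(imu_window, emg_window):
    """Concatenate motion and muscle features into a single vector."""
    return imu_features(imu_window) + emg_features(emg_window)


# Two made-up gestures with identical hand motion but different muscle activity.
motion = [(0.0, 0.1, 9.8, 1.0, 0.0, 0.0), (0.0, 0.3, 9.8, 3.0, 0.0, 0.0)]
emg_a = [(0.3, 0.0), (-0.3, 0.0)]  # activity on channel 0 only
emg_b = [(0.0, 0.4), (0.0, -0.4)]  # activity on channel 1 only

sign_a = fused_features(motion, emg_a)
sign_b = fused_features(motion, emg_b)
```

In this toy case the first 12 values (the motion features) are identical for both gestures, and only the trailing sEMG values tell them apart, which mirrors the “please” versus “sorry” problem described above.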

In Jafari’s system, both inertial sensors and electromyographic sensors are placed on the user’s right wrist, where they detect gestures and send information via Bluetooth to an external laptop. The laptop runs complex algorithms to interpret the sign and display the correct English word for the gesture. As Jafari continues to develop the technology, he says his team will look to incorporate all of these functions into one wearable device by combining the hardware and reducing the overall size of the required electronics. He envisions the device collecting the data produced from a gesture, interpreting it and then sending the corresponding English word to another person’s smart device, so that the recipient can understand what is being signed simply by reading the screen. In addition, he is working to increase the number of signs the system recognizes and to expand the system to both hands.
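The article does not say which algorithms the laptop runs. As a stand-in, the sketch below uses a simple nearest-centroid classifier to show the general shape of the step that maps a feature vector to an English word. The function names, the three-value feature layout and the training numbers are hypothetical.

```python
import math


def train_centroids(examples):
    """Average the feature vectors recorded for each word.

    examples: dict mapping a word to a list of equal-length feature vectors.
    """
    centroids = {}
    for word, vectors in examples.items():
        n = len(vectors)
        centroids[word] = [sum(column) / n for column in zip(*vectors)]
    return centroids


def classify(features, centroids):
    """Return the word whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda word: math.dist(features, centroids[word]))


# Toy vectors: [motion energy, sEMG channel 0, sEMG channel 1] (made-up values).
training = {
    "please": [[1.0, 0.30, 0.00], [1.1, 0.28, 0.02]],
    "sorry": [[1.0, 0.00, 0.40], [0.9, 0.05, 0.38]],
}
centroids = train_centroids(training)

word_1 = classify([1.0, 0.25, 0.05], centroids)
word_2 = classify([1.0, 0.02, 0.41], centroids)
```

Here `word_1` comes out as "please" and `word_2` as "sorry", even though the motion value is the same in both queries. In a device like the one described, the resulting word would then be shown on a screen or sent to another person’s device.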

“The combination of muscle activation detection with motion sensors is a new and exciting way of understanding human intent with other applications in addition to enhanced SLR systems, such as home device activations using context-aware wearables,” Jafari says.
