From performing surgery and flying planes to babysitting kids and driving cars, today’s robots can do it all.

With chatbots such as Eugene Goostman recently hailed as having “passed” the Turing test, robots appear to be growing ever more adept at posing as humans. As machines become more deeply integrated into human lives, the need to imbue them with a sense of morality grows more urgent. But can we really teach robots how to be good?

An innovative piece of research recently published in the Journal of Experimental & Theoretical Artificial Intelligence examines machine morality and asks whether it is “evil” for robots to masquerade as humans.

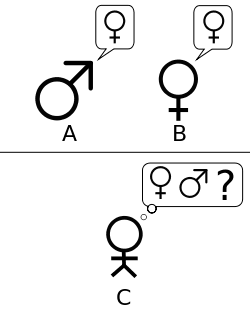

Drawing on Luciano Floridi’s theories of Information Ethics and artificial evil, the researchers explore the ethical implications of developing machines in disguise. ‘Masquerading refers to a person in a given context being unable to tell whether the machine is human’, they explain; this is the very essence of the Turing test. Such deception increases “metaphysical entropy”, meaning any corruption of entities and impoverishment of being. Because it leads to a lack of good in the environment, or infosphere, Floridi regards metaphysical entropy as the fundamental evil. Building on this premise, the team set out to determine where ‘the locus of moral responsibility and moral accountability’ lies in relationships with masquerading machines, and to establish whether it is ethical to develop robots that can pass a Turing test.

The study identifies and analyses six significant actor-patient relationships that yield key insights into the question. Examining the associations between developers, robots, users and owners, and drawing on notable examples such as Nanis’ Twitter bot and Apple’s Siri, the team try to pin down where ethical accountability lies: with machines, with humans, or somewhere in between.

The Latest on: Machine ethics

- Alabama Legislative session ends with gambling bill among swath of dead bills (May 9, 2024, 8:10 pm)

Gambling bill dies, as does an ethics revamp bill, while stiffer penalties for false reporting of crime are approved.

- MIT Technology Review (May 9, 2024, 3:16 pm)

A small brain sample was sliced into 5,000 pieces, and machine learning helped stitch it back together. When wastewater surveillance turns into a hunt for a single infected individual, the ethics get ...

- The Unstoppable Noise That Was Steve Albini (May 9, 2024, 2:02 pm)

The legendary musician, producer, and writer lived by his own fiercely stubborn code of punk DIY ethics, reigning as one of rock's most brilliant provocateurs even as he became a model for how to ...

- Analyzing the Ethics Behind Biomedical Engineering (May 7, 2024, 1:12 pm)

The movie takes place in an unspecified future where children are ‘made’ through a eugenics-based genome engineering program that removes or limits the risk of diseases and ensures that children ...

- CNET's Best Tested Bread Machines of 2024 (May 7, 2024, 3:24 am)

CNET’s expert staff reviews and rates dozens of new products and services each month, building on more than a quarter century of expertise.

- A physicists’ guide to the ethics of artificial intelligence (May 6, 2024, 6:00 am)

Physics may seem like its own world, but different sectors using machine learning are all part of the same universe.

- Some Legal Ethics Quandaries on Use of AI, the Duty of Competence, and AI Practice as a Legal Specialty (May 5, 2024, 5:00 pm)

Ethics rules require that a lawyer have the necessary skills ... Predictive coding is a type of AI – active machine learning for binary classifications. It is easier to use than the new LLM types of ...

- Ethics and Consciousness in Detroit: Become Human (May 5, 2024, 5:00 pm)

Overall, “Detroit: Become Human” is a fantastic game that delves deeply into the ethics and morals not only of the player but also questions the concepts of consciousness and self-awareness in ...

- Former mayoral candidate Paul Vallas urges leniency for Ed Burke, ‘whom I always felt met my standards for ethics’ (April 30, 2024, 1:35 pm)

Four letters written by Vallas and others in Burke's case became public Tuesday at the urging of the Chicago Sun-Times and Chicago Public Media. Burke is set to be sentenced June 24 following his ...

- Jack Smith Stung By Ethics Complaint (April 30, 2024, 10:07 am)

Republican Congresswoman Elise Stefanik has filed a complaint to the Justice Department, accusing Smith of "trying to interfere with the 2024 election." ...

via Bing News